Talking to myself using GPT

14 Jul 2021Transformer & attention models are all the hype in the machine learning community recently, so I took sometime to learn about them. As a fun project, I’ve decided to build a virtual version of myself (thanks to trove of dataset from Facebook messenger export).

Data preparation

The training data was prepared through downloading my entire Facebook chat history, and using a parsing script I wrote. There’s a couple of gotchas when it comes to parsing Facebook messages data:

- Ignore chat threads where most of the language is non-English (Korean is my first language).

- Remove automated messages (Eg: Words With Friends game requests).

- Combine consecutive messages into a single sentence.

- Set a threshold to yield a new context (I’ve chosen this as 50th percentile of message time delta).

Overall, the preparation pipeline looks as follows:

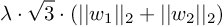

The context of the model is preceding 4 messages before my reply. 3 distractors are chosen from the sent replies in the current chat for the supervision task. I haven’t investigated the effects of these two parameter choices – tuning these context and distractor parameters could be an interesting study.

The final dataset yields 29192 lines and is 11MB. I’ve split as 25000 lines as training set and the rest 4192 lines as the validation set. This is a fraction of the amount of data the baseline model was trained on (DialoGPT was trained on 147M multi-turn dialogue from Reddit). Regardless, I found that I was able to get reasonable results with these small datasets.

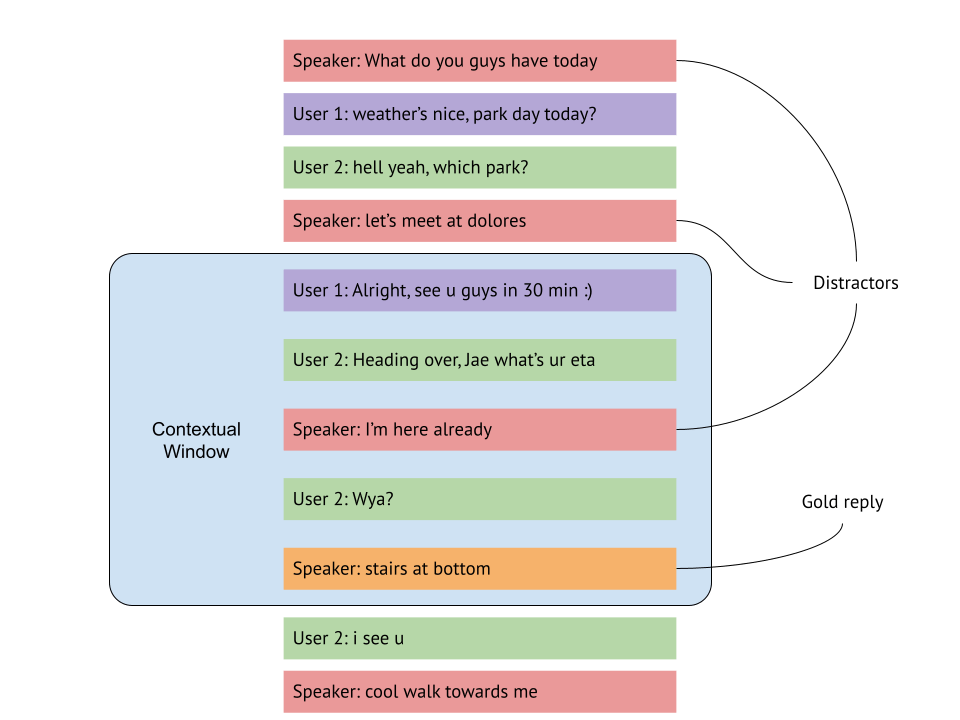

Here is the final objective distribution (for a reply of length  ):

):

Model architecture

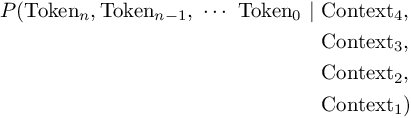

The architecture is taken directly from the HuggingFace’s ConvAI2 (NeurIPS 2018) winning model. The language modeling head is making the actual token predictions, with an extra next sentence classification head added on to the hidden states of the last token to discriminate between the correct reply and a negatively sampled reply. Refer to the linked blogpost and the code for details – the transformer network (and attention module) is a fascinating piece of work that deserves close… attention. For the sake of this post, you can think of the attention module as learning a masking function that focuses on each word in a given sentence (in a fill-in-the-blank manner).

It seems the discriminator head is designed to act as a weak supervision function to aid language modeling task (through cross learning), but I didn’t notice any noticable improvements to the main LM task. More details in the following sections.

Training implementation

I’m relying on HuggingFace’s Transformer library to train the model on Google Colab’s TPUs. I ran into several problems, which I mostly fixed by copy-paste engineering and customizing to suit my needs:

- Training on TPU (using pytorch/xla) requires fixed tensor sizes for speed up. This requires some additional care on preprocessing the data part (see block size optimization on parameter tuning section below). I assume this is due to TPU internally optimizing the memory layout.

- HuggingFace’s Trainer (as of v4.8.2) does not support exporting multiple losses. The final optimization loss is the weighted sum of two tasks: language modeling and next sentence classification, but for final evaluation we only care about the language modeling task. A quick hack to report all three loss is in the code implementation here.

- DialoGPT was trained without using token IDs, as a special boolean mask to indicate whether a sentence is a reply or not.

The following sections describe the parameter tuning explorations.

Block size

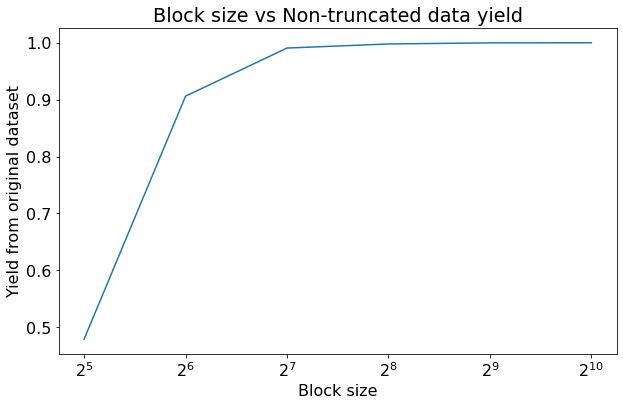

This is to resolve the fixed tensor requirement with the TPU above. I wrote a script to calculate percentage of training rows that go above limit at each tokenization step. From the chart below, it seems we get a 99% yield with block size of  .

.

I’ve updated the data processing step to pad the data to become a fixed width of 128 and ignore rows that overflow – we are good to go.

Epoch

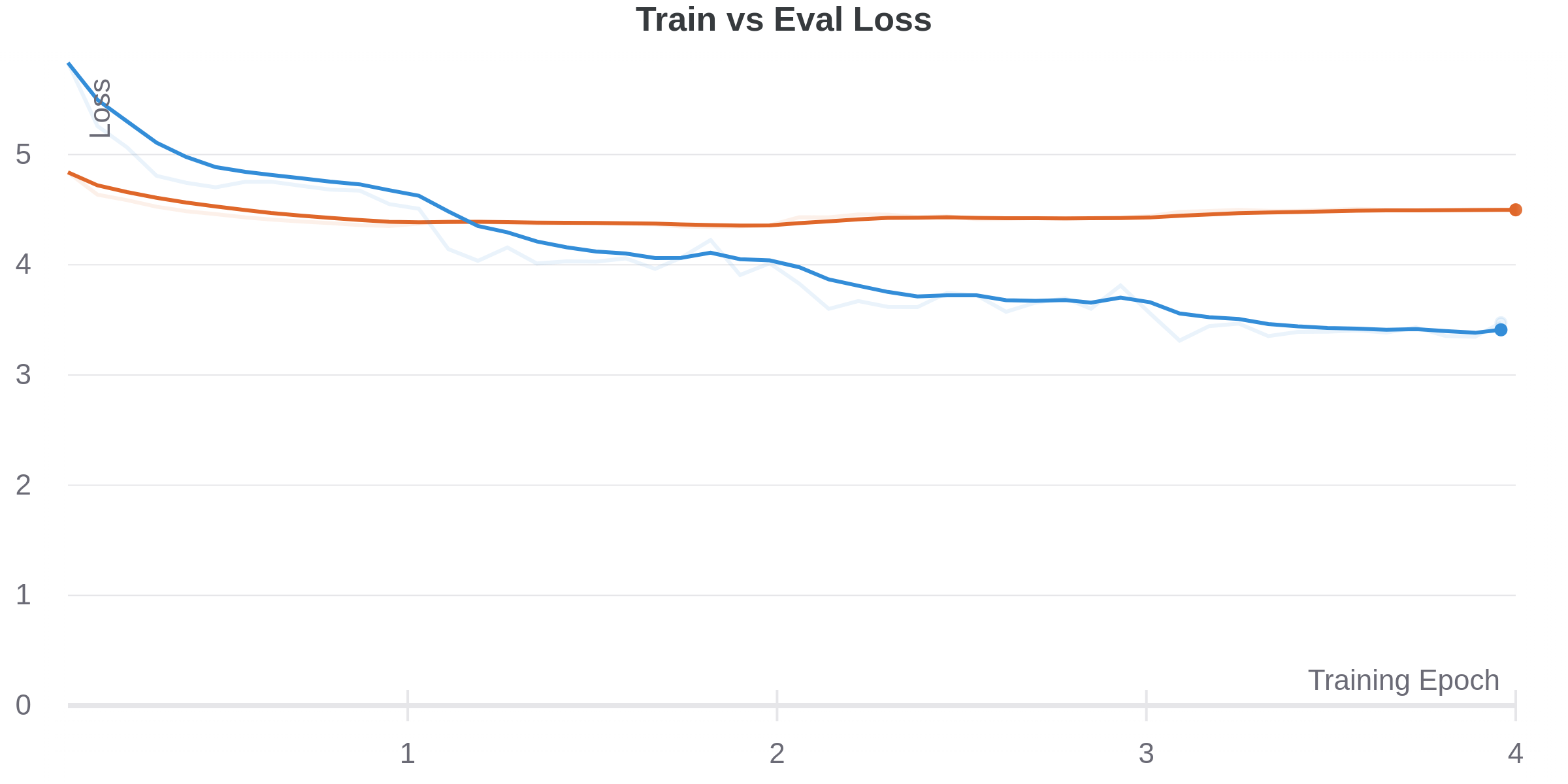

I kicked off an exploratory run with epoch of 4 to see if multi-epoch training is even reasonable. Small dataset, large model size and sparse tokens seems like a recipe for overfitting, so I chose a batch size of 1 per TPU (which gives us an update batch size of 8, as there are 8 TPU cores). Next sentence prediction task was not used in this run.

We seem to overfit after 1 epoch, with eval loss increasing after 2 epoch.

Task weight exploration

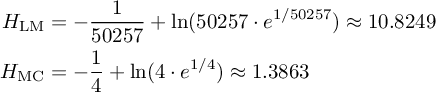

For a baseline, below is the entropy of a background predictors that generate a random choice for the two tasks. The DialoGPT tokenizer contains 50257 tokens, and we choose 3 distractors for the next sentence prediction tasks.

The background entropy for language modeling task is 7.8 times higher than next sentence prediction! A 50% reduction in the classification task would be considered only a 7% reduction in the language modeling task in the final loss function.

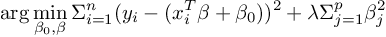

The final loss function is formulaized as

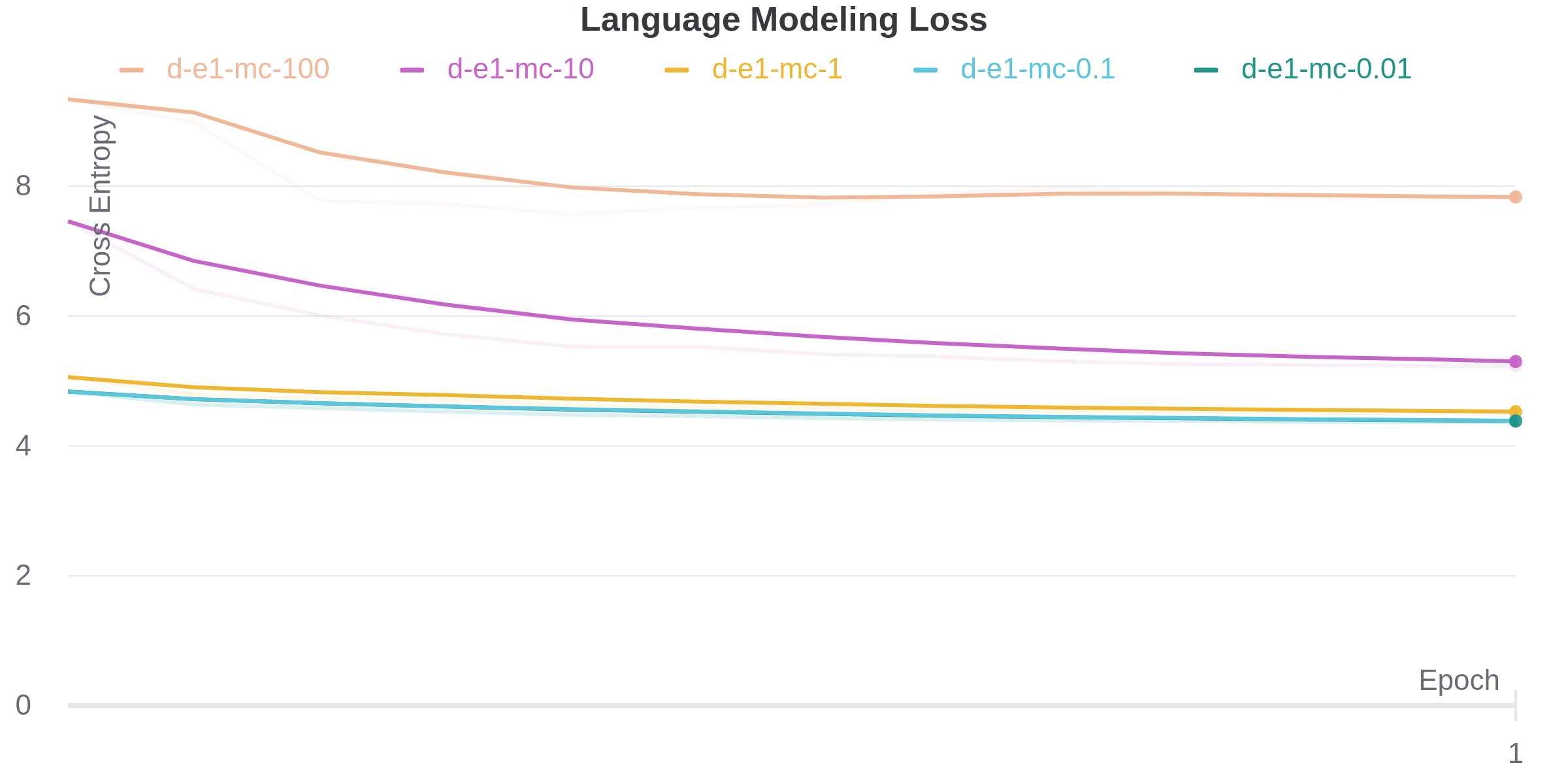

To verify the potential improvements, I tuned the  in the combined loss to the following values. Reported metrics are eval metrics.

in the combined loss to the following values. Reported metrics are eval metrics.

|  |  |  |  |  |

|---|---|---|---|---|---|

| 100 | 1.819 | 1.312 | 7.814 | 0.722 | 0.0014 |

| 10 | 1.328 | 0.958 | 5.229 | 0.483 | 0.0012 |

| 1 | 1.063 | 0.767 | 4.503 | 0.415 | 0.0002 |

| 0.1 | 1.325 | 0.956 | 4.365 | 0.403 | 0.0002 |

| 0.01 | 1.386 | 0.999 | 4.361 | 0.402 | 0.0001 |

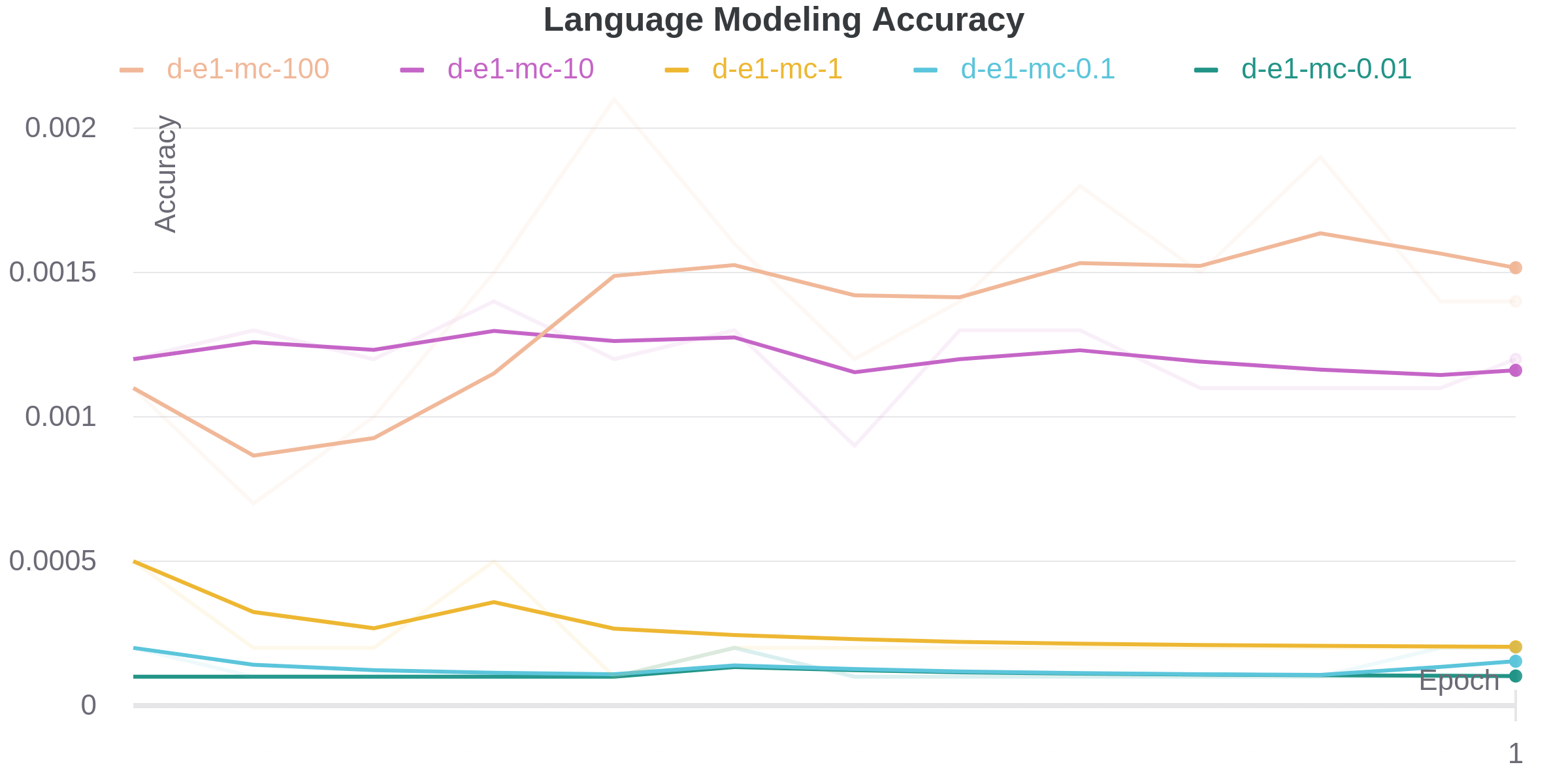

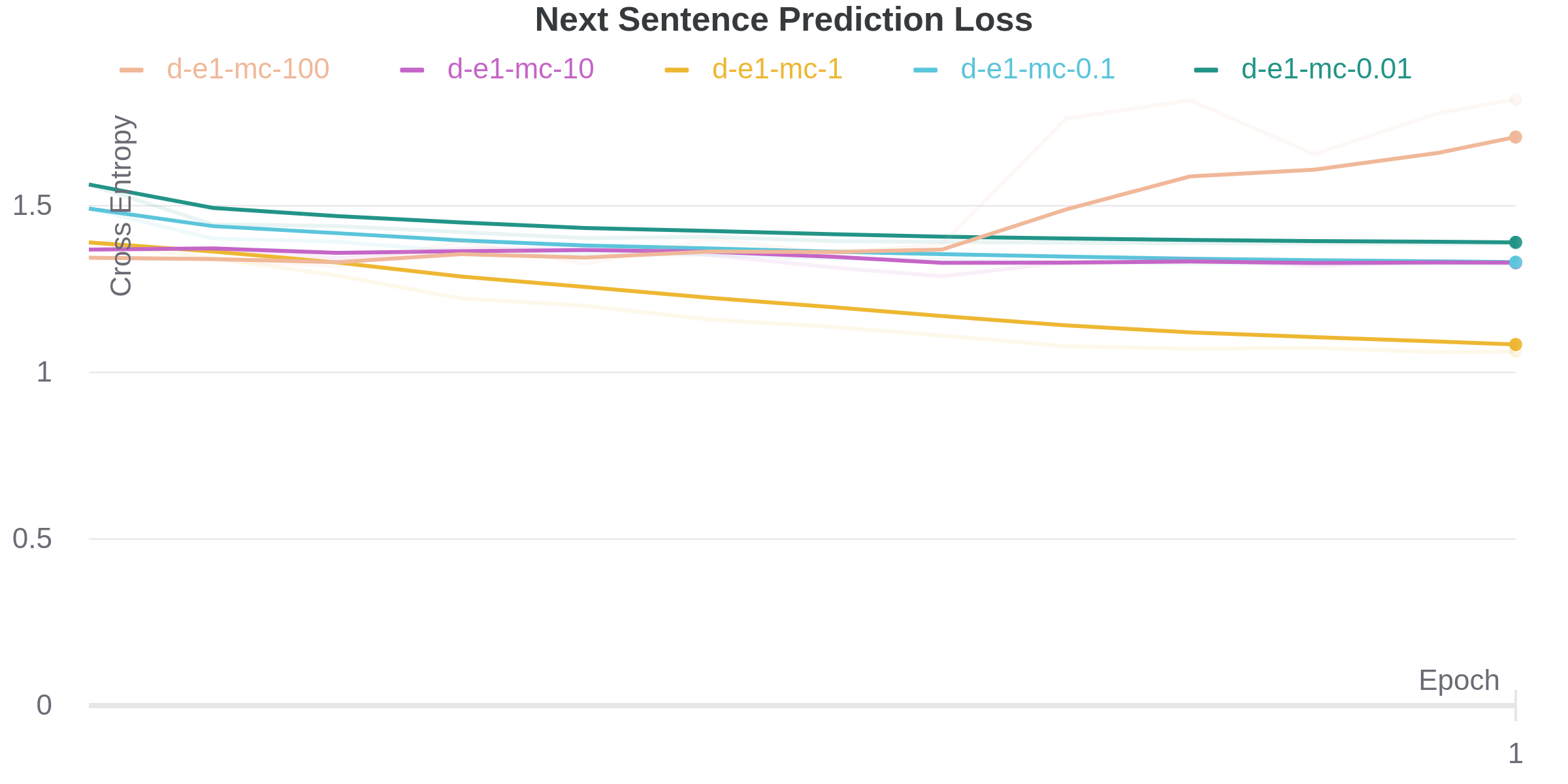

And their corresponding learning curves:

Using the supervision tasks shows minimal improvement to the main LM loss; rather makes it worse.

In constract to  , the inclusion of supervision tasks increase the LM modeling accuracy. However this isn’t a good metric to optimize for, as we will be using beam search to generate responses through sampling; learning an accurate distribution of next token is more important than predicting the most likely token.

, the inclusion of supervision tasks increase the LM modeling accuracy. However this isn’t a good metric to optimize for, as we will be using beam search to generate responses through sampling; learning an accurate distribution of next token is more important than predicting the most likely token.

Increasing the  parameter does not result in linear increase to MC loss, with

parameter does not result in linear increase to MC loss, with  showing the best result. In fact, a multiplier of 100 shows a worse result than random guess! I couldn’t think of a reasonable explanation for this; my guess is it has to do with steep gradient magnitudes with higher

showing the best result. In fact, a multiplier of 100 shows a worse result than random guess! I couldn’t think of a reasonable explanation for this; my guess is it has to do with steep gradient magnitudes with higher  ; plotting magnitude of gradient might give us a better idea here.

; plotting magnitude of gradient might give us a better idea here.

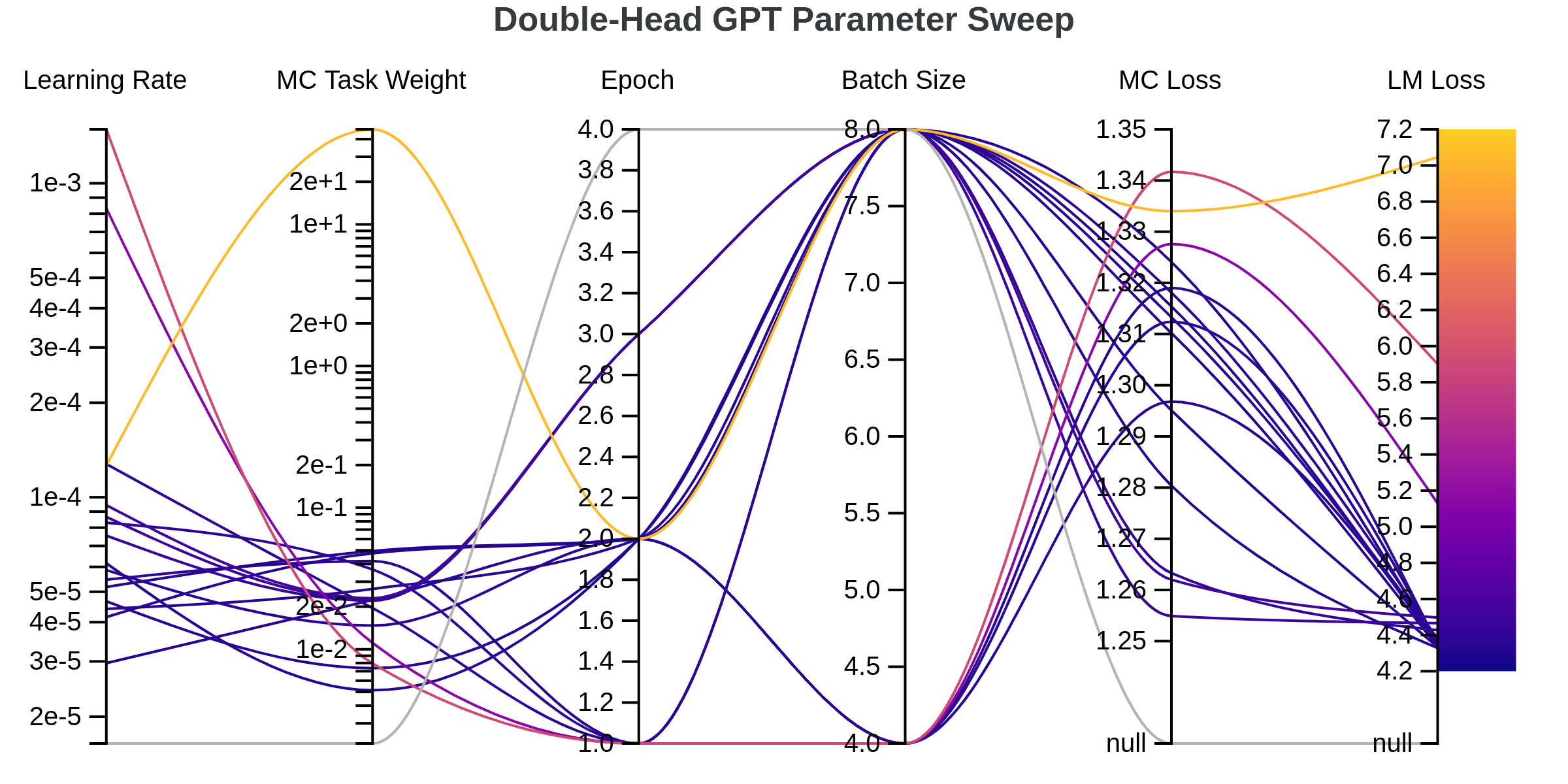

Bayes hyperparameter optimization

We did some initial exploration of model architectures; now let’s systematically explore the entire search space to get the best candidate. To do this, we utilize Weights & Biases’ excellent sweep utility, with the config tuned to run various configurations. The sweep algorithm is very simple, it fits a gaussian process regressor on parameter and samples from the trained distribution.

The results of the runs are listed below:

| Learning Rate | MC Task Weight | Epoch | Batch Size | MC Loss | LM Loss |

|---|---|---|---|---|---|

| 5.18e-05 | 0.0493938 | 2 | 8 | 1.2804 | 4.3287 |

| 4.15e-05 | 0.0475979 | 2 | 8 | 1.2951 | 4.3339 |

| 4.41e-05 | 0.026687 | 2 | 8 | 1.3102 | 4.3351 |

| 6.16e-05 | 0.0051356 | 2 | 8 | 1.3154 | 4.3364 |

| 4.66e-05 | 0.0073607 | 2 | 8 | 1.3241 | 4.3367 |

| 2.96e-05 | 0.0223147 | 2 | 4 | 1.319 | 4.3451 |

| 5.86e-05 | 0.0147082 | 2 | 4 | 1.2968 | 4.3544 |

| 5.46e-05 | 0.0419673 | 1 | 4 | 1.3124 | 4.3571 |

| 0.0001275 | 0.0195402 | 1 | 8 | 1.3133 | 4.3706 |

| 8.3e-05 | 0.0365972 | 1 | 8 | 1.3181 | 4.3762 |

| 7.55e-05 | 0.0220401 | 3 | 8 | 1.2633 | 4.4274 |

| 8.66e-05 | 0.022435 | 3 | 8 | 1.2549 | 4.4661 |

| 9.43e-05 | 0.0229404 | 3 | 8 | 1.262 | 4.4974 |

| 0.0008321 | 0.011086 | 1 | 4 | 1.3276 | 5.1287 |

| 0.0014851 | 0.0079413 | 1 | 4 | 1.3417 | 5.9006 |

| 0.0001262 | 46.8726004 | 2 | 8 | 1.334 | 7.0459 |

I’ve kicked off a final training run with all data with the following parameters:

Conversation examples

I’ve generated some samples using the generator script in the repository. It relies on HuggingFace’s implementation of beam search, where a token is sampled from the predicted distribution of tokens (with previous tokens as the prior). After some trial and error I’ve arrived at  (filter out tokens where probability is < 0.25, and choose top 100 tokens).

(filter out tokens where probability is < 0.25, and choose top 100 tokens).

Jae-GPT on his hobbies.

>>> What hobbies do you enjoy in your free time

JaeGPT: I'm not sure what I enjoy in free time, but I'm not really into hip hop or

>>> Are you actively working on developing your hobbies

JaeGPT: I like to listen to music and play video games, but also like to play video game games and

>>> Which video games?

JaeGPT: I'm working on a game called Minecraft that's pretty fun and addictive, but it's not optimized

>>> You're trying to create Minecraft

JaeGPT: I don't think Minecraft is a good idea for a first time user. It's a lot of

Jae-GPT on collecting wrist watches.

>>> Do you like collecting wristwatches

JaeGPT: I don't like watches but I like watches that look cool and I like the design of them and

>>> Which watch do you think looks the coolest

JaeGPT: I like watches with a nice design and a good finish but I don't really like watches as a

>>> as a tool?

JaeGPT: I think it looks pretty cool but I think it's too much work for me to wear everyday lol

>>> I like to wear my nomos everyday

JaeGPT: i don't like watches that's too formal but i like watches for everyday wear so i'll probably

>>> wear an apple watch?

JaeGPT: I'm thinking of buying a watch with a strap that's more comfortable to wear on the wrist and

Jae-GPT on discussing the stock market.

>>> Should I buy some options on Robinhood

JaeGPT: What's Robinhood? I'm not sure if it's a mutual fund or not lololol

>>> It's a brokerage

JaeGPT: I'm not a big investor in Robinhood but I'm a big fan of the company and I

>>> Are you bullish or bearish on Robinhood

JaeGPT: I think I'm bullish on Robin hood but I think I'll be bullish on Amazon and Google too

>>> So you like all the tech stocks

JaeGPT: I'm bullish for Robinhood too but I don't think I can get into FB or Google lol

>>> But you work for Facebook

JaeGPT: I think you can get a job at Google or Amazon too if you're a tech company and you

Jae-GPT on wealth accumulation.

>>> What kind of life do you want to live?

JaeGPT: I want to be a billionaire but I don't want to go back to school and live a life

>>> It's not easy to become a billionaire.

JaeGPT: I'm not sure if I can do that But I'm willing to try my best to make it

>>> Get rich or die trying

JaeGPT: I want a billionaire to live a long life so I can be a millionaire again and live the rest

>>> You're going to become a millionaire from a billionaire?

JaeGPT: I'm going to be a billionaire from a millionaire I'm going into a billionaire I'm not going

Jae-GPT on living in Korea.

>>> Would you ever move back to Korea?

JaeGPT: I'm not sure, I'm not a big fan of the culture there, I think I'll

>>> What don't you like about the culture?

JaeGPT: The culture is pretty chill, but I'm kinda scared of the people I met there lol. I

>>> You are scared of Koreans?

JaeGPT: I'm scared of Asians too. I'm scared to be interviewed there lolololol. I

>>> That's interesting since you are Korean.

JaeGPT: I don't think I'll be applying to any of the big tech companies in Korea lol. They

Jae-GPT on his delusions on the housing market.

>>> Got any good stock picks?

JaeGPT: I'm thinking of buying a house in SF for $200, but I'm not sure yet.

>>> Wow! That's an amazing deal. Where?

JaeGPT: San Mateo! It's a pretty small place but I think it's worth it. I'm

>>> $200! Are you sure it's not a cardboard box on the streets?

JaeGPT: I'm not too sure, I'll ask my friend who's living in SF and ask if he

>>> You should do more background research.

JaeGPT: I've been thinking about getting a job at a startup in SF for the past couple of years.

>>> Are you sure that will pay for the house?

JaeGPT: I'm thinking of moving to SF, but I'm not sure if I can get a job there

Jae-GPT on gaming PC.

>>> Is a gaming PC worth it

JaeGPT: I don't think so But I'm not sure if it's worth it to buy a gaming computer

>>> But you can play TF2 on full options

JaeGPT: I'm not buying a gaming pc for gaming lol I'm buying a laptop for gaming and gaming lol

>>> Laptop for gaming sucks

JaeGPT: I have a gaming PC and I can play tf2 on it lol I can't play TF1

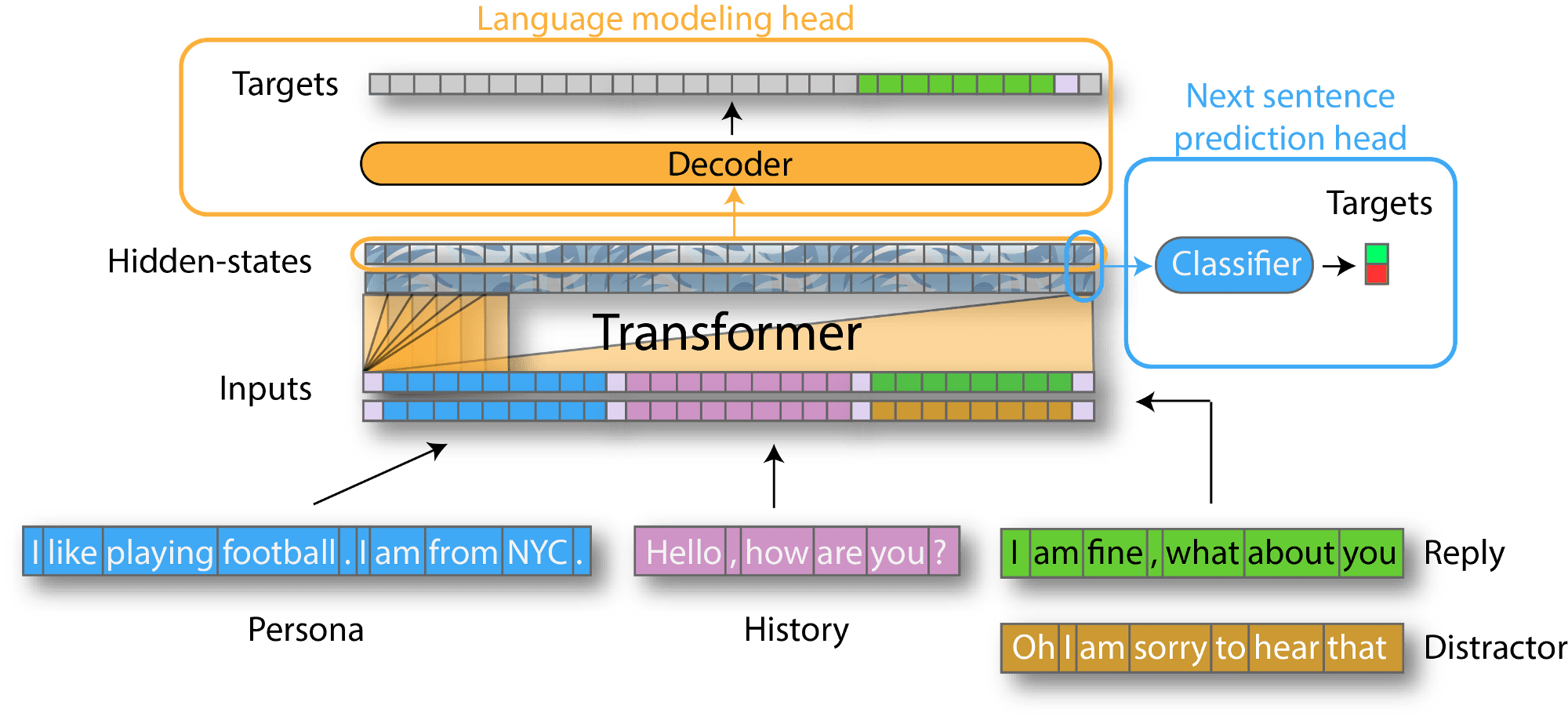

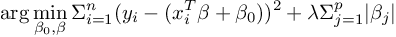

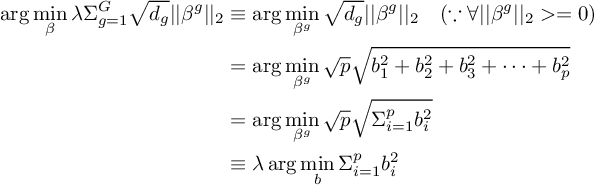

for LASSO

for LASSO for ridge

for ridge . The penalty for having one skewed large value is much greater for ridge regression. Ridge regularization aims to reduce variance between the coefficients, therefore driving all features down to zero.

. The penalty for having one skewed large value is much greater for ridge regression. Ridge regularization aims to reduce variance between the coefficients, therefore driving all features down to zero.

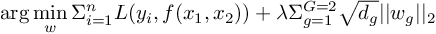

denotes the size of the group.

denotes the size of the group. denotes the L2-norm of the feature group

denotes the L2-norm of the feature group  .

. .

. , and we for some

, and we for some  that satisfies

that satisfies  , we could effectively rewrite the equation as

, we could effectively rewrite the equation as

, the regularization term can be expressed as

, the regularization term can be expressed as

, the regularization term essentially becomes a LASSO (L1) regularization.

, the regularization term essentially becomes a LASSO (L1) regularization. , the regularization term essentially becomes a ridge (L2) regularization.

, the regularization term essentially becomes a ridge (L2) regularization.

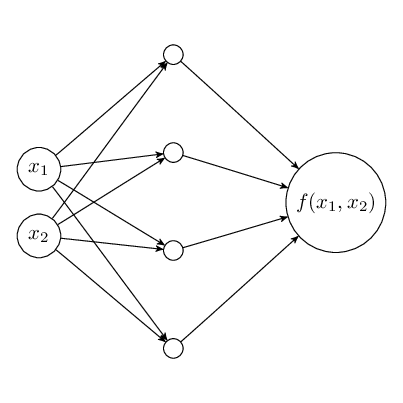

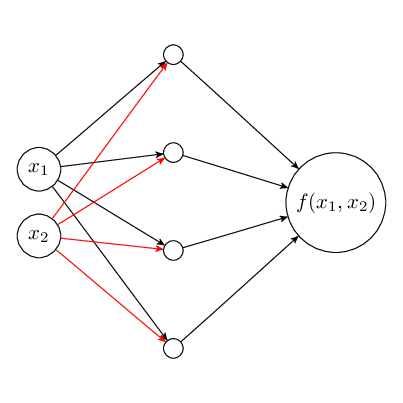

(marked in red).

(marked in red).

and

and  denote the weight vectors for input features

denote the weight vectors for input features  and

and

denotes the loss function and

denotes the loss function and  denotes the full-connected weights to feature

denotes the full-connected weights to feature  . Since we have two input features, the regularization term would also expand to

. Since we have two input features, the regularization term would also expand to